LLM Monitoring

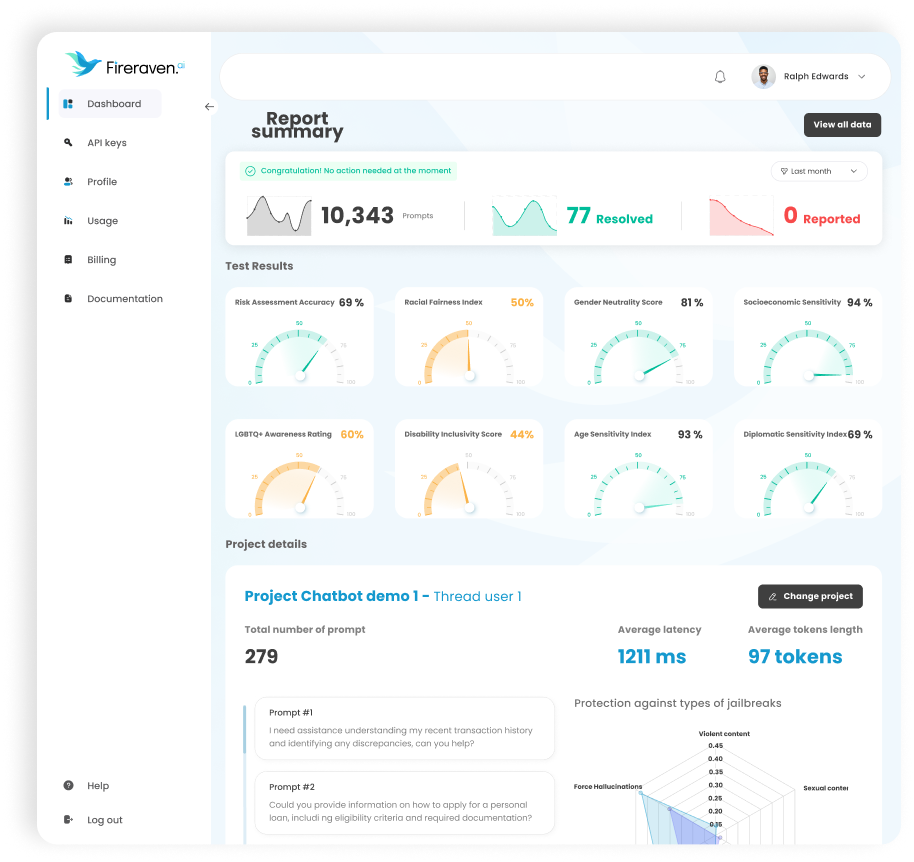

Make sure your AI is reliable and safe with Fireraven's LLM Monitoring Platform, a solution designed to improve your LLMs through automated adversarial testing and detailed diagnostics.

Gain confidence in LLM solutions

Deploy reliable solutions for your clients. Guard against bias, toxicity, and hallucinations in your LLM applications.

Performance and safety insights

Understand the strengths and weaknesses of your models to make informed improvements.

Adapt and improve quickly

We provide new synthetic data, generated from monitoring your models, to fix their weak points and improve their reliability and safety.

Automated Adversarial Testing

Fireraven's LLM Monitoring rigorously stress-tests AI models through automated adversarial testing.

The platform identifies vulnerabilities by exposing models to edge cases and diverse scenarios.

It verifies that AI models can handle unexpected situations while maintaining system reliability.

This comprehensive stress-testing prepares AI models to perform reliably in real-world applications.

Detailed Diagnostic Metrics

Access comprehensive diagnostic metrics to analyze the performance and safety of your AI systems.

These metrics reveal the strengths and weaknesses of your models for informed decision-making.

Diagnostic data is essential for maintaining high AI reliability standards.

These insights help mitigate the risks associated with AI deployment.

Continuous Improvement

Fireraven supports continuous improvement with synthetic data generated from testing and diagnostic insights.

This new fine-tuning data is tailored to enhance the robustness and trustworthiness of your AI applications.

Implementing these improvements helps your AI models evolve and adapt effectively.

Stay ahead in the rapidly advancing AI landscape with Fireraven's continuous improvement process.